The relationship between metrics, ServiceMonitors, and Targets in Prometheus

Where do the metrics in the Prometheus drop-down list come from?

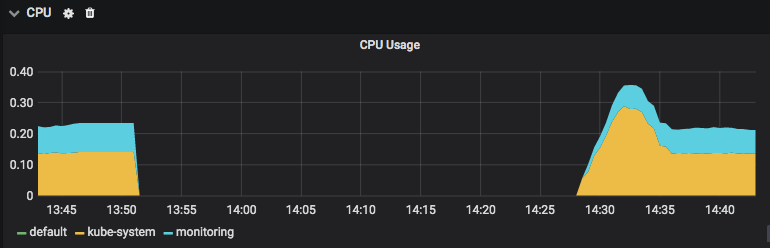

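If components such as Alertmanager and kube-state-metrics are installed, each of them exposes a /metrics endpoint. Prometheus scrapes (pulls from) these endpoints to collect metric data, and the metric names it collects then appear in the drop-down list of the Prometheus dashboard. At scrape time Prometheus also attaches target labels such as job and instance to every series.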
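To make the scrape step concrete, here is a minimal sketch of what it boils down to: read the plain-text exposition format returned by a /metrics endpoint, collect the metric names (roughly what ends up in the drop-down), and attach the target labels job and instance. It is a simplified parser written for illustration only (no escaping, timestamps, or exemplars); the payload, the function names scrape and metric_names, and the job/instance values are hypothetical. The exported_ renaming mirrors Prometheus's default honor_labels: false behaviour for clashing labels.

```python
import re

# Hypothetical /metrics payload in the Prometheus text exposition format.
# Lines starting with '#' are HELP/TYPE comments; other non-empty lines are samples.
SAMPLE_PAYLOAD = """\
# HELP kube_pod_info Information about pod.
# TYPE kube_pod_info gauge
kube_pod_info{namespace="default",pod="web-0"} 1
kube_pod_info{namespace="default",pod="web-1"} 1
# TYPE process_cpu_seconds_total counter
process_cpu_seconds_total 12.5
"""

# metric name, optional {label="value",...} block, then the sample value
SAMPLE_RE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(\{[^}]*\})?\s+(\S+)')
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="([^"]*)"')


def scrape(payload, job, instance):
    """Simplified scrape: parse samples and attach the target labels job/instance.

    Ignores escaping, timestamps and exemplars; not a full exposition-format parser.
    """
    samples = []
    for line in payload.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # blank line or HELP/TYPE comment
        m = SAMPLE_RE.match(line)
        if m is None:
            continue  # skip lines this simplified parser cannot read
        name, raw_labels, value = m.groups()
        labels = dict(LABEL_RE.findall(raw_labels or ""))
        for key, target_value in (("job", job), ("instance", instance)):
            if key in labels:
                # default honor_labels: false keeps a clashing label as exported_<key>
                labels["exported_" + key] = labels[key]
            labels[key] = target_value
        samples.append((name, labels, float(value)))
    return samples


def metric_names(samples):
    """Distinct metric names, roughly what ends up in the drop-down list."""
    return sorted({name for name, _, _ in samples})


samples = scrape(SAMPLE_PAYLOAD, job="kube-state-metrics", instance="10.0.0.5:8080")
print(metric_names(samples))  # ['kube_pod_info', 'process_cpu_seconds_total']
```

The names come from whatever the endpoint exposes, while job and instance come from the target that Prometheus was scraping, which is why the same metric can appear with different job/instance values.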

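Some people online say that a ServiceMonitor is an abstraction of an exporter. I don't think that's right; at most it abstracts part of one. A ServiceMonitor only declares which Services Prometheus should scrape and how (port, path, interval); the exporter that actually serves /metrics is deployed separately.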

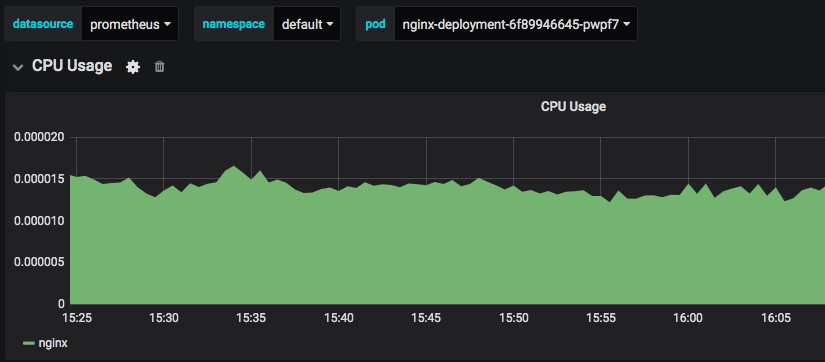

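One ServiceMonitor corresponds to n entries on the Targets page, roughly one per endpoint (Pod IP and port) of the Services it selects.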
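The one-to-many relationship can be modelled roughly as follows: the ServiceMonitor's label selector picks Services, and every ready address behind each selected Service, combined with the named port, becomes one target. This is a simplified sketch, not the Prometheus Operator's implementation; it ignores namespaceSelector, relabelings, and multiple endpoint entries, and the classes, the choice of taking job from the Service name, and the addresses in the example are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Service:
    """Simplified Kubernetes Service plus the ready addresses from its Endpoints."""
    name: str
    labels: dict
    ports: dict                                     # port name -> port number
    addresses: list = field(default_factory=list)   # ready Pod IPs


@dataclass
class ServiceMonitor:
    """Simplified ServiceMonitor: a matchLabels selector and the port to scrape."""
    name: str
    match_labels: dict
    port: str
    path: str = "/metrics"


def targets_for(sm, services):
    """Expand one ServiceMonitor into scrape targets, one per address:port."""
    targets = []
    for svc in services:
        # matchLabels semantics: every selector label must be present and equal
        if any(svc.labels.get(k) != v for k, v in sm.match_labels.items()):
            continue
        if sm.port not in svc.ports:
            continue  # the Service does not expose the named port
        port_number = svc.ports[sm.port]
        for ip in svc.addresses:
            targets.append({
                "job": svc.name,  # assumption: job label taken from the Service name
                "instance": f"{ip}:{port_number}",
                "url": f"http://{ip}:{port_number}{sm.path}",
            })
    return targets


services = [
    Service("alertmanager-main", {"app": "alertmanager"}, {"web": 9093},
            ["10.0.0.11", "10.0.0.12", "10.0.0.13"]),
    Service("grafana", {"app": "grafana"}, {"http": 3000}, ["10.0.0.20"]),
]
sm = ServiceMonitor("alertmanager", {"app": "alertmanager"}, port="web")

for target in targets_for(sm, services):
    print(target["url"])  # three targets from a single ServiceMonitor
```

Deleting the ServiceMonitor corresponds to dropping sm from the input: the whole group of targets goes away at once. The sketch says nothing about series already written to storage, which is why metric names can outlive their targets.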

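When you delete a ServiceMonitor object, its targets definitely disappear from the Targets page, but that does not mean the corresponding metrics disappear from the Prometheus drop-down list; the metrics are still there. Some of their labels may change, but I have not verified this yet.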

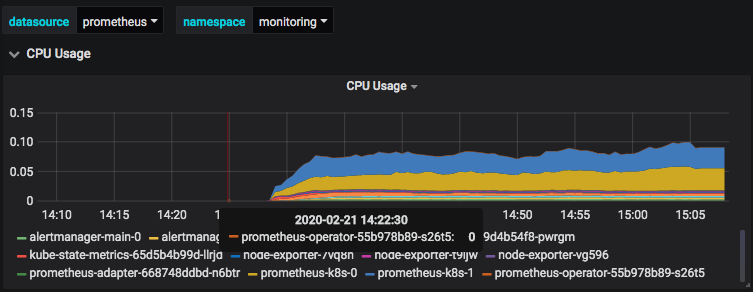

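When you delete the Pods, for example by deleting the Alertmanager Deployment so that its Pods are removed automatically, the corresponding metrics are still in the drop-down list, but they no longer have any values.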
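The "name still listed, but no value" behaviour matches Prometheus's staleness handling: when a target disappears, Prometheus appends a stale marker to each of its series, and an instant query returns nothing for a series whose most recent sample within the lookback window (5 minutes by default) is a stale marker, or that has no sample in that window at all. The series itself remains in storage until it ages out, so its name can still be listed. Below is a minimal model of that rule, not Prometheus's actual code; the STALE sentinel (real stale markers are a special NaN value), the function name instant_value, and the timestamps are illustrative.

```python
# Prometheus's default --query.lookback-delta is 5 minutes.
LOOKBACK_SECONDS = 300

# Real stale markers are a special NaN bit pattern; this sketch uses a sentinel.
STALE = object()


def instant_value(samples, t, lookback=LOOKBACK_SECONDS):
    """Value an instant query at time t would return for one series, or None.

    samples: (timestamp, value) pairs sorted by timestamp; value may be STALE.
    """
    latest = None
    for ts, value in samples:
        if ts > t:
            break
        latest = (ts, value)  # most recent sample at or before t
    if latest is None:
        return None
    ts, value = latest
    if value is STALE or t - ts > lookback:
        return None  # stale marker, or nothing recent enough in the window
    return value


# Hypothetical series scraped every 30 s from t=0 to t=600. The Pod is then
# deleted, the target disappears, and a stale marker is appended at t=630.
scraped = [(ts, 1.0) for ts in range(0, 601, 30)]
series = {"process_cpu_seconds_total": scraped + [(630, STALE)]}

print(sorted(series))                                            # name still stored
print(instant_value(series["process_cpu_seconds_total"], 620))   # 1.0
print(instant_value(series["process_cpu_seconds_total"], 700))   # None: stale marker
print(instant_value(scraped, 700))    # 1.0: without a marker, lookback still finds t=600
print(instant_value(scraped, 1000))   # None: last sample is older than 5 minutes
```

The last two lines show why stale markers exist: without them a vanished series would keep returning its last value for up to the full lookback window.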

Only after restarting Prometheus at this point does the metric disappear from the list.

After the kube-state-metrics Pods are deleted, metrics whose names start with kube_, such as kube_pod_info, no longer have values.

After the node-exporter DaemonSet is deleted, node metrics such as node_cpu_seconds_total no longer have values.
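These observations can also be checked through the HTTP API instead of the UI: /api/v1/label/__name__/values lists the metric names Prometheus still has stored, and /api/v1/query runs an instant query such as kube_pod_info. The sketch below only builds the URLs and interprets the JSON responses, so it can be tried offline; the base URL, the helper names, and the sample responses are hypothetical, and the actual fetch (for example with urllib.request.urlopen) is left out.

```python
import json
from urllib.parse import urlencode


def label_values_url(base, label="__name__"):
    """URL that lists all stored values of a label; __name__ yields metric names."""
    return f"{base.rstrip('/')}/api/v1/label/{label}/values"


def query_url(base, promql):
    """URL for an instant query through the HTTP API."""
    return f"{base.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def has_value(query_body):
    """True if an /api/v1/query response contains at least one sample."""
    body = json.loads(query_body)
    if body.get("status") != "success":
        raise ValueError(f"query failed: {body.get('error')}")
    return len(body["data"]["result"]) > 0


def names_without_values(names_body, query_bodies):
    """Metric names that are still listed but whose instant query returns nothing.

    query_bodies maps a metric name to the raw JSON of its /api/v1/query response.
    """
    names = json.loads(names_body)["data"]
    return sorted(n for n in names
                  if n in query_bodies and not has_value(query_bodies[n]))


BASE = "http://prometheus.example:9090"  # hypothetical Prometheus address
print(label_values_url(BASE))
print(query_url(BASE, "kube_pod_info"))

# Hypothetical responses, e.g. after the kube-state-metrics Pods were deleted.
names_body = '{"status":"success","data":["kube_pod_info","node_cpu_seconds_total"]}'
query_bodies = {
    "kube_pod_info":
        '{"status":"success","data":{"resultType":"vector","result":[]}}',
    "node_cpu_seconds_total":
        '{"status":"success","data":{"resultType":"vector","result":['
        '{"metric":{"__name__":"node_cpu_seconds_total","cpu":"0","mode":"idle"},'
        '"value":[1700000000,"123.4"]}]}}',
}
print(names_without_values(names_body, query_bodies))  # ['kube_pod_info']
```

In PromQL itself, absent(kube_pod_info) answers the same question for a single metric: it returns a one-element vector with value 1 when no series of that name currently has a value, which makes it a common building block for alerts on a missing exporter.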