In this post, we configure automatic service discovery with kubernetes_sd_configs.

As before, the Kubernetes monitoring stack was installed with kube-prometheus.

Last time, the YAML files were already provided by the Prometheus Operator. This time, we need to create a new YAML file for the configuration ourselves.

The contents of prometheus-additional.yaml are below. Note that the discovery role is set to pod here, so Prometheus will discover every pod in the Kubernetes cluster.

```yaml
- job_name: 'kubernetes-cadvisor'
```

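Next, edit prometheus-prometheus.yaml as well. Under spec, add an additionalScrapeConfigs field just before serviceAccountName, and point it at the additional-configs secret and its prometheus-additional.yaml key: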

```yaml
spec:
```

Delete the old secret, if there is one:

```shell
kubectl delete secret additional-configs -n monitoring
```

On the first run there is none, of course, so just create the secret:

```shell
kubectl create secret generic additional-configs --from-file=prometheus-additional.yaml -n monitoring
```
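The secret and the additionalScrapeConfigs field have to agree on the key name. As a minimal sketch, the Python below builds a Secret laid out the way `kubectl create secret generic --from-file=<path>` does it: the data key is the file's base name and the value is the base64-encoded file content. The one-line file content and the helper name `secret_manifest` are illustrative assumptions.

```python
import base64
import json
import os


def secret_manifest(name: str, namespace: str, path: str, content: bytes) -> dict:
    """Build a Secret dict shaped like `kubectl create secret generic --from-file=<path>`:
    the data key is the file's base name, the value is the base64-encoded content."""
    key = os.path.basename(path)
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "type": "Opaque",
        "metadata": {"name": name, "namespace": namespace},
        "data": {key: base64.b64encode(content).decode("ascii")},
    }


# Hypothetical file content; the real prometheus-additional.yaml is longer.
content = b"- job_name: 'kubernetes-cadvisor'\n"
secret = secret_manifest("additional-configs", "monitoring",
                         "prometheus-additional.yaml", content)

# additionalScrapeConfigs selects a secret by name and a key inside its data.
selector = {"name": "additional-configs", "key": "prometheus-additional.yaml"}
assert selector["key"] in secret["data"]
print(json.dumps(secret, indent=2))
```

If the file is added under a different key (for example `--from-file=other-key=prometheus-additional.yaml`), the additionalScrapeConfigs key must follow the secret's key, not the file name.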

Then apply the Prometheus configuration again, since we modified it:

```shell
kubectl apply -f prometheus/prometheus-prometheus.yaml
```

After a short wait, the Prometheus Configuration page shows the updated configuration, which now includes an entry with job_name kubernetes-cadvisor. That part reads:

```yaml
- job_name: kubernetes-cadvisor
```

Strangely, though, there is no kubernetes-cadvisor target on the Targets page. Check the pods:

```shell
kubectl get po -n monitoring
```

Nothing abnormal shows up in the pods.

Next, check the Pod logs:

```shell
kubectl logs -f prometheus-k8s-0 prometheus -n monitoring
```

```text
level=error ts=2020-03-05T02:56:36.493Z caller=klog.go:94 component=k8s_client_runtime func=ErrorDepth msg="/app/discovery/kubernetes/kubernetes.go:263: Failed to list *v1.Endpoints: endpoints is forbidden: User \"system:serviceaccount:monitoring:prometheus-k8s\" cannot list resource \"endpoints\" in API group \"\" at the cluster scope"
level=error ts=2020-03-05T02:56:37.491Z caller=klog.go:94 component=k8s_client_runtime func=ErrorDepth msg="/app/discovery/kubernetes/kubernetes.go:264: Failed to list *v1.Service: services is forbidden: User \"system:serviceaccount:monitoring:prometheus-k8s\" cannot list resource \"services\" in API group \"\" at the cluster scope"
level=error ts=2020-03-05T02:56:37.494Z caller=klog.go:94 component=k8s_client_runtime func=ErrorDepth msg="/app/discovery/kubernetes/kubernetes.go:265: Failed to list *v1.Pod: pods is forbidden: User \"system:serviceaccount:monitoring:prometheus-k8s\" cannot list resource \"pods\" in API group \"\" at the cluster scope"
```

There are many error entries, but they are mostly these three repeating in a loop:

```text
Failed to list *v1.Endpoints: endpoints is forbidden: User \"system:serviceaccount:monitoring:prometheus-k8s\" cannot list resource \"endpoints\" in API group \"\" at the cluster scope.
Failed to list *v1.Service: services is forbidden: User \"system:serviceaccount:monitoring:prometheus-k8s\" cannot list resource \"services\" in API group \"\" at the cluster scope.
Failed to list *v1.Pod: pods is forbidden: User \"system:serviceaccount:monitoring:prometheus-k8s\" cannot list resource \"pods\" in API group \"\" at the cluster scope.
```
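When the same few errors repeat in a loop, it helps to collapse them into distinct denials. The sketch below uses only the Python standard library: a regular expression pulls the user, verb, resource and API group out of each "forbidden" message, and the matches are counted. The sample lines are trimmed copies of the messages above. The regex is an assumption tuned to this message format, not an official parser.

```python
import re
from collections import Counter

# Matches the RBAC denial inside a Kubernetes "forbidden" error message.
# Quotes may be escaped (\") because the text sits inside msg="..." in the log.
FORBIDDEN = re.compile(
    r'User \\?"(?P<user>[^"\\]+)\\?" cannot (?P<verb>\w+) resource '
    r'\\?"(?P<resource>[^"\\]+)\\?" in API group \\?"(?P<group>[^"\\]*)\\?"'
)


def summarize(lines):
    """Count each distinct (user, verb, resource, api_group) denial."""
    counts = Counter()
    for line in lines:
        m = FORBIDDEN.search(line)
        if m:
            counts[(m["user"], m["verb"], m["resource"], m["group"])] += 1
    return counts


USER = "system:serviceaccount:monitoring:prometheus-k8s"


def sample(kind, resource):
    # Rebuilds one of the log messages above, with escaped quotes as in the log.
    return (f'Failed to list *v1.{kind}: {resource} is forbidden: User \\"{USER}\\" '
            f'cannot list resource \\"{resource}\\" in API group \\"\\" at the cluster scope')


# The three messages, repeated twice to mimic the loop in the log.
logs = [sample("Endpoints", "endpoints"), sample("Service", "services"),
        sample("Pod", "pods")] * 2

for (user, verb, resource, group), n in summarize(logs).items():
    print(f"{n}x {user} cannot {verb} {resource} (group {group!r})")
```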

"endpoints/services/pods is forbidden" points to an RBAC permission problem: the ServiceAccount prometheus-k8s in the monitoring namespace is not allowed to list these resources at the cluster scope.

Looking at prometheus-prometheus.yaml, we can see that Prometheus is bound to a ServiceAccount named prometheus-k8s:

```yaml
apiVersion: monitoring.coreos.com/v1
```

That ServiceAccount is in turn bound to a ClusterRole, also named prometheus-k8s.

The binding is defined in prometheus-clusterRoleBinding.yaml:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
```

Now look at the ClusterRole itself, defined in prometheus-clusterRole.yaml:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
```

These rules grant no list permission on endpoints, services or pods, so modify the ClusterRole as follows:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
```
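A simplified model of RBAC rule matching shows why the original ClusterRole fails and the modified one passes. In this model, a rule applies when its verbs, apiGroups and resources each contain the requested value or `*`. This is a sketch, not the API server's authorizer: it ignores resourceNames and subresource wildcards. Both rule sets are also assumptions. `before` approximates the default kube-prometheus prometheus-k8s ClusterRole of that era. `after` is a hypothetical fix that adds get/list/watch on the resources from the error logs, plus `nodes/proxy`, which requests through the API-server node proxy URL need.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PolicyRule:
    verbs: List[str]
    api_groups: List[str] = field(default_factory=list)
    resources: List[str] = field(default_factory=list)
    non_resource_urls: List[str] = field(default_factory=list)


def _covers(values: List[str], wanted: str) -> bool:
    # "*" in a rule field matches any value.
    return "*" in values or wanted in values


def allows(rules: List[PolicyRule], verb: str, resource: str, api_group: str = "") -> bool:
    """True if any rule grants `verb` on `resource` in `api_group` ("" = core group)."""
    return any(
        _covers(r.verbs, verb)
        and _covers(r.api_groups, api_group)
        and _covers(r.resources, resource)
        for r in rules
    )


# Assumed approximation of the original prometheus-k8s ClusterRole.
before = [
    PolicyRule(verbs=["get"], api_groups=[""], resources=["nodes/metrics"]),
    PolicyRule(verbs=["get"], non_resource_urls=["/metrics"]),
]
# Hypothetical fix: service discovery lists and watches these core resources.
after = before + [
    PolicyRule(
        verbs=["get", "list", "watch"],
        api_groups=[""],
        resources=["nodes", "services", "endpoints", "pods", "nodes/proxy"],
    ),
]

for res in ("endpoints", "services", "pods"):
    print(res, allows(before, "list", res), allows(after, "list", res))
```

The same question can be put to a live cluster with `kubectl auth can-i list pods --as=system:serviceaccount:monitoring:prometheus-k8s`, which asks the real authorizer instead of this model.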

Apply the change:

```shell
kubectl apply -f prometheus-clusterRole.yaml
```

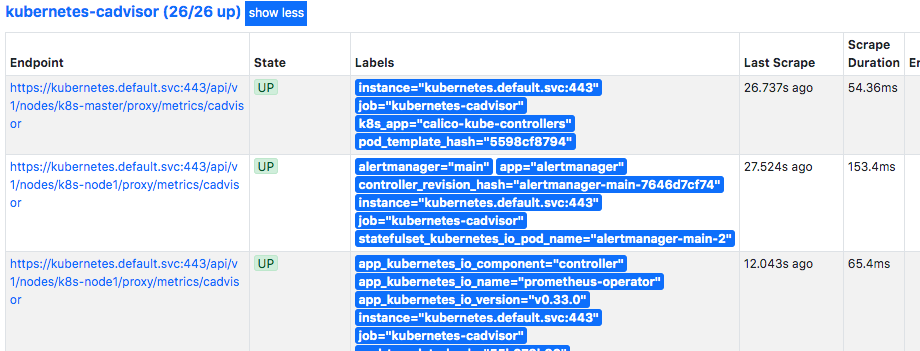

Now the targets show up.

Take the second endpoint as an example: https://kubernetes.default.svc:443/api/v1/nodes/k8s-node1/proxy/metrics/cadvisor

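The name kubernetes.default.svc cannot be resolved on a node, so first look up the real address behind it (the ClusterIP of the kubernetes Service, 10.96.0.1 in this cluster):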

```shell
kubectl get svc
```

Then take the token from the ServiceAccount's secret, and the URL can be requested to fetch the metrics:

```shell
curl -k https://10.96.0.1:443/api/v1/nodes/k8s-node1/proxy/metrics/cadvisor -H "Authorization: Bearer <token>"
```
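The same request can be sketched in Python with only the standard library. This sketch rests on a few assumptions: the API-server address and node name come from the curl command, the token is a dummy value, and the helper names are hypothetical. Secret `.data` values are base64-encoded, so the token has to be decoded before it goes into the Bearer header. The SSL context mirrors `curl -k` and should only be used against test clusters.

```python
import base64
import ssl
import urllib.request


def token_from_secret_data(data_token_b64: str) -> str:
    """Secret .data values are base64-encoded; the bearer token is the decoded text."""
    return base64.b64decode(data_token_b64).decode("utf-8")


def cadvisor_request(apiserver: str, node: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a request for a node's cAdvisor metrics
    through the API-server proxy."""
    url = f"{apiserver}/api/v1/nodes/{node}/proxy/metrics/cadvisor"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})


# Like curl -k: skip certificate and hostname checks (test clusters only).
insecure = ssl.create_default_context()
insecure.check_hostname = False  # must be disabled before CERT_NONE is allowed
insecure.verify_mode = ssl.CERT_NONE

# Dummy token standing in for the secret's base64-encoded .data.token value.
token = token_from_secret_data(base64.b64encode(b"example-token").decode("ascii"))
req = cadvisor_request("https://10.96.0.1:443", "k8s-node1", token)
print(req.full_url)

# To actually send it against a real cluster:
# body = urllib.request.urlopen(req, context=insecure).read().decode()
```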

The metrics start with container_, exactly like the kubelet/1 metrics discussed in the earlier post, Prometheus monitoring/kubelet metrics.
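To check that claim against a saved scrape, list the metric names by prefix. The sketch below reads the Prometheus text exposition format. It skips `#` comment lines (HELP/TYPE) and takes the metric name from the start of each sample line. The sample lines and their values are invented for illustration.

```python
import re

# A metric name as allowed by the Prometheus data model.
METRIC_NAME = re.compile(r"[a-zA-Z_:][a-zA-Z0-9_:]*")


def names_with_prefix(exposition: str, prefix: str) -> list:
    """Return the sorted distinct metric names that start with `prefix`."""
    names = set()
    for line in exposition.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # blank line, or a HELP/TYPE comment
        m = METRIC_NAME.match(line)
        if m and m.group(0).startswith(prefix):
            names.add(m.group(0))
    return sorted(names)


# Invented sample in the text format; real cAdvisor output is far longer.
sample = """\
# HELP container_cpu_usage_seconds_total Cumulative cpu time consumed in seconds.
# TYPE container_cpu_usage_seconds_total counter
container_cpu_usage_seconds_total{id="/"} 12345.6
container_memory_working_set_bytes{id="/"} 1.2e+09
container_memory_working_set_bytes{id="/kubepods"} 8.0e+08
"""

print(names_with_prefix(sample, "container_"))
# ['container_cpu_usage_seconds_total', 'container_memory_working_set_bytes']
```

For a real check, pass in the body returned by the request, or the contents of a saved scrape file.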

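So we could in fact have added this target by modifying the kubelet/1 configuration instead. However, kubelet/1 is mainly meant to monitor the kubelet itself. It does not monitor other pods, and their targets are not kept by its relabel rules, as the configuration below shows: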

```yaml
relabel_configs:
```
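Here is a small model of Prometheus's `action: keep` that shows what it means for a target not to be kept. The values of `source_labels` are joined with the separator (`;` by default). The target survives only if the regex matches the whole joined string, because Prometheus anchors the regex. The two keep rules and the target label sets are assumptions, modeled on what the Prometheus Operator typically generates for a kubelet ServiceMonitor, and are not copied from the kubelet/1 configuration. Prometheus uses Go's RE2 syntax, and Python's `re` only behaves the same for simple patterns like these.

```python
import re
from typing import Dict, List, Tuple


def keep(labels: Dict[str, str], source_labels: List[str], regex: str,
         separator: str = ";") -> bool:
    """Model of relabel `action: keep`; absent labels count as empty strings."""
    value = separator.join(labels.get(name, "") for name in source_labels)
    return re.fullmatch(regex, value) is not None  # anchored, like Prometheus


# Hypothetical keep rules: (source_labels, regex).
rules: List[Tuple[List[str], str]] = [
    (["__meta_kubernetes_service_label_k8s_app"], "kubelet"),
    (["__meta_kubernetes_endpoint_port_name"], "https-metrics"),
]

# Hypothetical discovered targets.
targets = [
    {"__meta_kubernetes_service_label_k8s_app": "kubelet",
     "__meta_kubernetes_endpoint_port_name": "https-metrics"},
    {"__meta_kubernetes_service_label_k8s_app": "my-app",
     "__meta_kubernetes_endpoint_port_name": "web"},
]

# A target is scraped only if every keep rule matches it.
kept = [t for t in targets if all(keep(t, src, rx) for src, rx in rules)]
print(len(kept))  # 1
```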

I saved the metrics to kubernetes-cadvisor-1.txt for download.