IntelliJ IDEA: new Maven Spring Boot project fails with "Cannot find JRE '1.8'"

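I created a new Maven Spring Boot project in IntelliJ IDEA. Once it was set up, the packages imported by the generated code could not be resolved, and the editor marked that code with red error highlighting.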

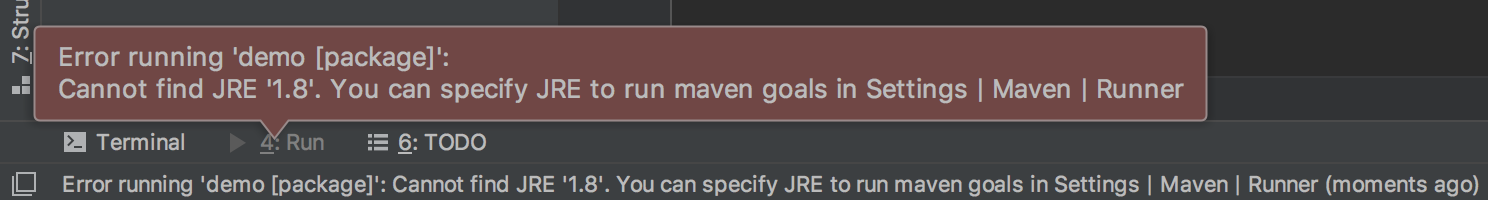

Clicking Reimport All Maven Projects, or running Maven goals such as clean or package, fails with the following error:

Error running 'demo [validate]': Cannot find JRE '1.8'. You can specify JRE to run maven goals in Settings | Maven | Runner

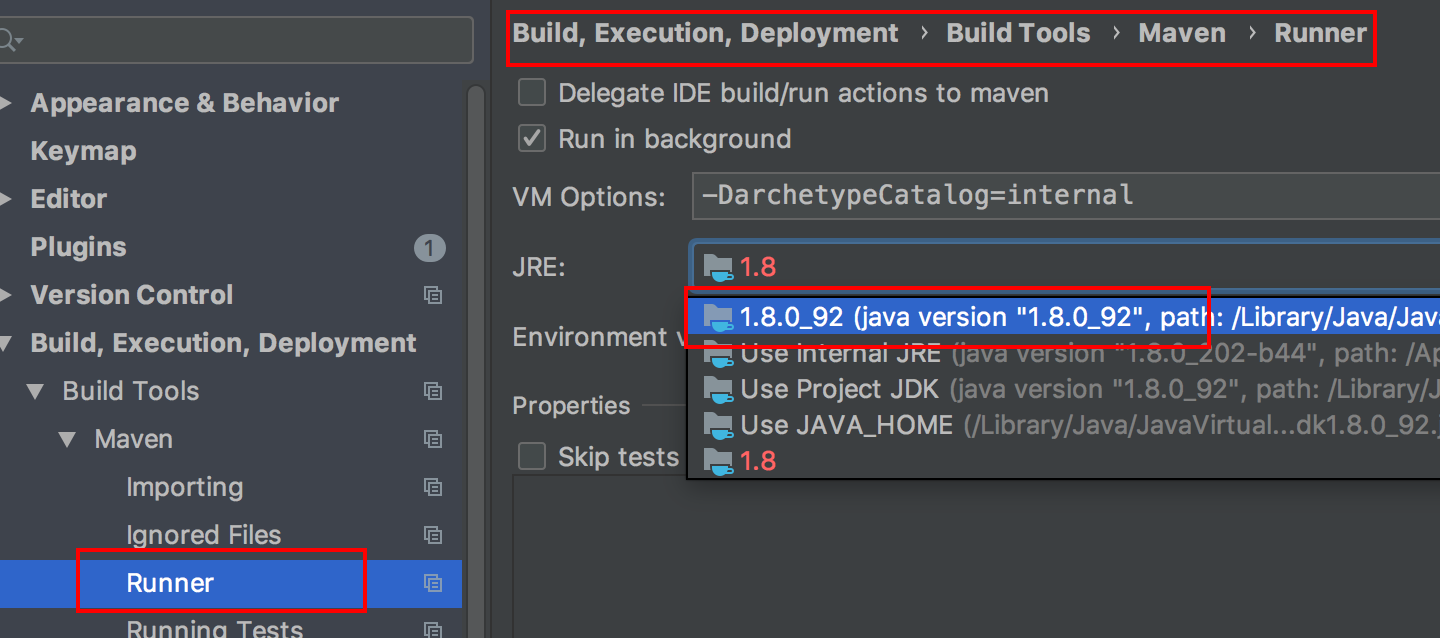

As the message suggests, open Settings | Maven | Runner and select a working JRE.

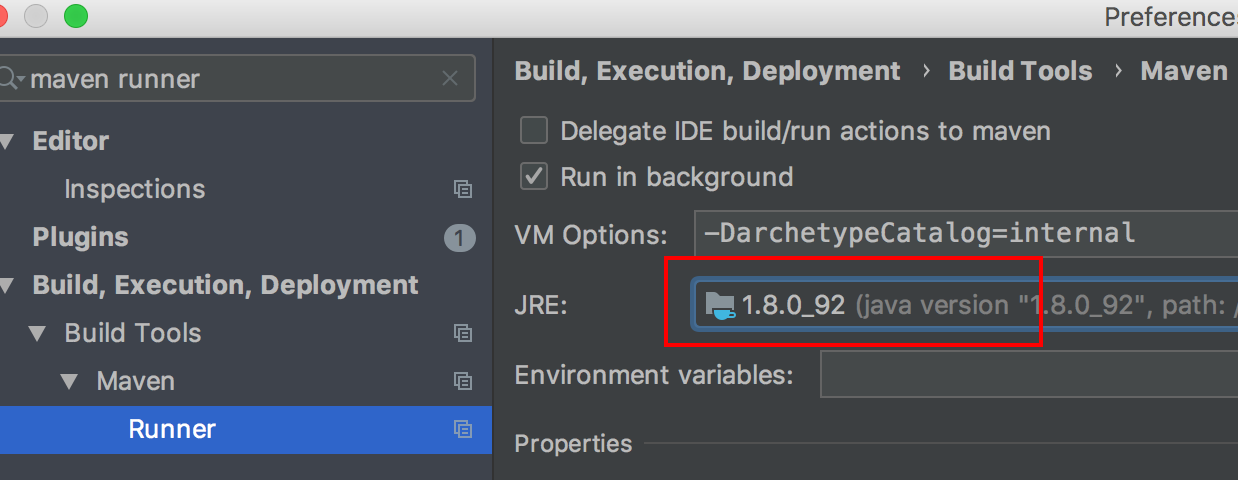

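This changes the setting only for the current project. To apply it to every project you create from now on, first close all open projects and then open Preferences.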

The axis parameter of pandas.apply()

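```python
DataFrame.apply(func, axis=0, broadcast=None, raw=False, reduce=None, result_type=None, args=(), **kwds)
```

This is the signature from older pandas releases. The broadcast and reduce parameters were deprecated in pandas 0.23 in favour of result_type and were removed in pandas 1.0.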

func: the function to apply to each column or row. It receives each column or row as a Series (or as a NumPy ndarray when raw=True) and can return either a scalar or a Series.
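The following minimal sketch shows what func receives and how its return type affects the result. It assumes only that pandas is installed. The DataFrame and the describe_input helper are illustrative.

```python
import pandas as pd

# Illustrative data: two numeric columns.
df = pd.DataFrame({"a": [1, 2, 3], "b": [10, 20, 30]})

def describe_input(s):
    # Hypothetical helper: report the type of object apply() passes in.
    # With the default axis=0, each call receives one column as a Series.
    return type(s).__name__

# Returning a scalar reduces each column to one value,
# so the result is a Series indexed by the column labels.
col_sums = df.apply(lambda s: s.sum())

# Returning a Series makes apply() assemble the pieces into a DataFrame:
# its index comes from the returned Series, its columns from df.
min_max = df.apply(lambda s: pd.Series({"min": s.min(), "max": s.max()}))

input_types = df.apply(describe_input)

print(input_types)
print(col_sums)
print(min_max)
```

Here input_types contains "Series" for both columns, col_sums holds 6 and 60, and min_max is a 2x2 DataFrame with rows min and max.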

The axis parameter is the one that people most often find confusing.

axis: the axis along which the function is applied. The default is 0.

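- If axis is 0 (or 'index'), the function is applied to each column.

- If axis is 1 (or 'columns'), the function is applied to each row.

Put another way, with axis=0 the function runs down the rows and receives one whole column per call, indexed by the row labels. With axis=1 it runs across the columns and receives one whole row per call, indexed by the column labels.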
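The following minimal sketch contrasts the two axis values. It assumes pandas is installed, and the row labels x, y, z are illustrative. Joining s.index shows which labels each call sees.

```python
import pandas as pd

# Illustrative data with explicit row labels.
df = pd.DataFrame(
    {"a": [1, 2, 3], "b": [10, 20, 30]},
    index=["x", "y", "z"],
)

# axis=0 (default): one call per column; each Series is indexed by x, y, z.
per_column = df.apply(lambda s: s.sum(), axis=0)
labels_axis0 = df.apply(lambda s: ",".join(s.index), axis=0)

# axis=1: one call per row; each Series is indexed by a, b.
per_row = df.apply(lambda s: s.sum(), axis=1)
labels_axis1 = df.apply(lambda s: ",".join(s.index), axis=1)

print(per_column)    # one value per column: a, b
print(per_row)       # one value per row: x, y, z
print(labels_axis0)
print(labels_axis1)
```

per_column holds the column sums 6 and 60. per_row holds the row sums 11, 22 and 33. The label strings confirm that axis=0 hands func a column ("x,y,z") and axis=1 hands it a row ("a,b").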

args: a tuple of extra positional arguments passed to func after the Series.
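The following minimal sketch shows how args and extra keyword arguments reach func. It assumes pandas is installed. The scale_and_shift function and its factor and offset parameters are hypothetical names chosen for illustration. Keyword arguments that apply() does not recognise itself are forwarded to func; the signature above shows them as **kwds.

```python
import pandas as pd

df = pd.DataFrame({"a": [1, 2, 3], "b": [10, 20, 30]})

def scale_and_shift(s, factor, offset=0):
    # Hypothetical function: s is the column (axis=0) or row (axis=1);
    # factor arrives via args, offset via a forwarded keyword argument.
    return s * factor + offset

# A one-element tuple needs the trailing comma: (2,) rather than (2).
scaled = df.apply(scale_and_shift, args=(2,))

# offset is not an apply() parameter, so it is passed on to func.
shifted = df.apply(scale_and_shift, args=(2,), offset=1)

print(scaled)
print(shifted)
```

func returns a Series for each column here, so both results are DataFrames with the same shape as df.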